Did you read comic books as a kid, or do you read them now? Do you remember those ads in the back that advertised all sorts of amazing-sounding junk: sea monkeys, Charles Atlas bodybuilding books, pepper gum, and x-ray specs? I do, and although I never sent in my hard-earned money, not even for sea monkeys (I was in my mid-30s before I ever owned sea monkeys, but that’s another story…), I was fascinated by those ads.

I think what drew me to the infamous “X-Ray Specs” was the idea of seeing the world differently. At the age of 8, I had little interest in the more prurient uses of x-ray specs, but seeing the world in a way that I normally could not was a fascinating concept. Of course (I’m sorry to tell you), “X-Ray Specs” don’t really do what they claim, but modern technology does allow us to alter our senses, in ways that are commonplace and in ways that no one seems to be exploring yet.

Quick sensory overview

Let’s take a quick glance over our senses, shall we? Classically, we are told we have 5: sight, hearing, smell, taste, and touch, although this ignores kinesthetic senses such as balance and the detection of movement. Broadly speaking, we have 3 kinds of senses: energy-based senses, such as sight and hearing, which intercept and interpret light or sound energy; chemical-based senses, such as taste and smell, whose chemoreceptors react to the presence of certain chemicals in the air or in substances we ingest; and physical-based senses, such as touch (in which nerve receptors react to physical changes in their environment, such as heat and pressure) and the kinesthetic senses (which detect movement and orientation, and are really a modified form of touch that “feels” the movement of fluid in the inner ear as we spin around or tilt).

Approaches for sensory transformation

If we want to transform our senses, we have three theoretical approaches: change the mechanisms of the senses themselves (bioengineering), change the way our brains process sensory data (more bioengineering, but now directly of the brain), or filter/transform the flow of information from “reality” to those senses. The last approach is clearly the simplest. In fact, it is probably the only one currently possible, despite very experimental and very rudimentary work on the other two (see: Neurohacking and Neural engineering).

Filtering sensory input means that we have to be able to capture sensory input, transform it in some manner, and replicate the transformed information to feed into the person’s senses. Unless we can tap into and override the sensory nerve impulses themselves (something currently beyond us), this last step implies that we have to stimulate the “sensory machinery” itself, and therefore it becomes apparent that some senses are much more complicated to transform than others.

Physical-based and chemical-based senses are very difficult to replicate and, for now, seem to be out of our reach. “Tactile feedback” and “force feedback” are real problems for current virtual reality technology, and attempts at simulating them are crude at best. Kinesthetic input can be simulated by spinning people around and physically moving them (the approach taken in many flight simulators), but there are practical limits to such simulation, such as the difficulty of simulating g-forces. Likewise, replicating chemical-based senses has serious limits. It would require us to synthesize, on demand, any chemical mixture that we can detect, which seems a complex (and probably messy) task. Despite its appearance on The Jetsons, and some early 20th-century experimentation, Smell-o-vision(tm) doesn’t seem likely to show up in your local electronics store any time soon.

However, energy-based senses such as sight and hearing are a lot easier to simulate, and therefore a lot easier to transform. In fact, we do it all the time without thinking twice about it.

Translocation

The first obvious transformation of senses that we can do is translocation: making our senses think they are somewhere other than where they are. This is so simple, and so commonplace, that you probably don’t even think about it. Flip on your television and tune into a live news broadcast like CNN Headline News. What do you see and hear? Possibly a news anchor reading an item, or perhaps footage from the location of a news story. In both cases, your vision and hearing are receiving input as if you were there. You are seeing and hearing things that are thousands of miles away, from a viewpoint that you don’t occupy! Of course, the translocation isn’t perfect. You won’t really think you’re there, because the transform introduces “artifacts”. Vision comes only from a small field on the front of a box, and there is only one picture. Sound comes from only one (or at best a few) places and lacks a fully natural sound and character (even with a home theater surround sound system). The same is true of a telephone. It is an imperfect translocation of your hearing to a point somewhere near the speaker, and it does the same for the person on the other end. Television and the telephone are common, obvious examples of translocating our senses.

Transformation

Since energy, both light and sound, can be described as waves, we can represent sight and sound mathematically and subject those representations to mathematical transforms, which describe possible sensory transforms. Admittedly, the senses are more complex than this. While light can be described as a wave, a picture is actually a composition of many “picture elements”, or pixels, each of which has a color of light that can be described as a wave. Vision is also actually a stereo pair of two individual pictures. Sound can be represented purely by a (usually very complex) wave, but we have two ears, so stereophonic or binaural effects have to be considered as well. Still, mathematical transforms of these wave representations can describe possible sensory transforms.
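To make the idea concrete, here is a minimal sketch. It assumes a sound is modeled as a sum of pure sine waves and stored as a list of samples, which is roughly how digital audio works. The function name `sample_wave` and the particular 440/880/1320 Hz tone are illustrative choices, not taken from any real device.

```python
import math

def sample_wave(components, sample_rate, n_samples):
    """Sample a sum of sinusoids at evenly spaced instants.

    components: list of (frequency_hz, amplitude) pairs.
    Returns n_samples values, taken sample_rate times per second.
    """
    return [
        sum(amp * math.sin(2 * math.pi * freq * n / sample_rate) for freq, amp in components)
        for n in range(n_samples)
    ]

# A hypothetical "moderately complex" tone: a 440 Hz fundamental plus two weaker overtones.
tone = [(440.0, 1.0), (880.0, 0.5), (1320.0, 0.25)]
samples = sample_wave(tone, sample_rate=8000, n_samples=80)  # 10 ms of sound
print(len(samples))  # 80
```

The sample rate has to be more than twice the highest frequency you want to keep. Here, 8000 Hz comfortably covers the 1320 Hz overtone. A stereo signal would simply be two such lists, one per ear.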

Amplification

Let us look at a wave function, see what we can do with it, and consider whether this means anything for possible sensory transforms. We’re going to make it moderately complex, so that it is obvious how we change it when we start changing it. (Note: all graphs here are thanks to the online version of the WZGrapher Function Grapher by Walter Zorn.)

Base Function

What can we do with this? Well, we can amplify it:

Original & Amplified Function

The sensory analogy here is clear. We have sound amplifiers, microphones, hearing aids, and parabolic “surveillance microphones” which amplify sound. We have night vision goggles (scopes, binoculars, etc.), which amplify light. Sensory amplification is something we do often and casually.

Night vision goggles: an example of a light amplifier
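In terms of the wave function, amplification is just multiplying every value by a constant gain. Here is a minimal sketch. It assumes the signal is a list of samples and that the output device saturates at a fixed level. The `limit` parameter is a hypothetical stand-in for that ceiling.

```python
def amplify(samples, gain, limit=1.0):
    """Scale every sample by `gain`, clipping the result to [-limit, limit].

    The clipping models an (assumed) output ceiling: real amplifiers saturate.
    """
    return [max(-limit, min(limit, gain * s)) for s in samples]

quiet = [0.0, 0.1, -0.2, 0.05]
print(amplify(quiet, gain=4.0))        # [0.0, 0.4, -0.8, 0.2]
print(amplify([0.5, -0.5], gain=4.0))  # [1.0, -1.0]  (clipped at the ceiling)
```

Conceptually, a night vision scope does the equivalent for light. It scales up the brightness of each picture element rather than the height of a sound wave.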

Frequency translation

What else can we do? We can “shift” the range of the function up or down.

Original & Translated Function

We do this as well, although not as often. Since the electromagnetic spectrum is a continuous range of frequencies, and human beings only see a specific range of frequencies, it doesn’t seem that complicated to move that range of frequencies up and down.

We need specialized cameras to do this, and we need a way to transform the input from the camera from the sensed range into the “human range”. We see this all the time in both infrared photography and ultraviolet photography. The results are often quite startling.

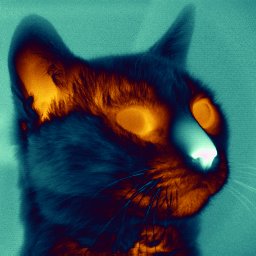

A cat, shown in the infrared spectrum

A tulip, shown in the ultraviolet spectrum

In the realm of sound, zoologists and biologists do this sort of “frequency translation” all the time when they study sounds made by animals, which often hear and make sounds outside our normal range of hearing. If you’ve ever heard a recording of whale song or bat sonar that has been “translated” into something you could hear, you have probably heard an example of frequency translation.
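Translation can be sketched by describing a sound by its frequency components and adding the same offset to each one. This is a simplified, hypothetical model: the `translate_spectrum` helper and the bat-call numbers are made up for illustration. Real bat detectors use techniques such as heterodyning, whose details differ.

```python
def translate_spectrum(components, offset_hz):
    """Shift every (frequency_hz, amplitude) component by offset_hz.

    Components pushed to 0 Hz or below are discarded, since they no
    longer correspond to a physical frequency.
    """
    shifted = []
    for freq, amp in components:
        new_freq = freq + offset_hz
        if new_freq > 0:
            shifted.append((new_freq, amp))
    return shifted

# Hypothetical bat call: energy around 40-50 kHz, above the roughly 20 kHz limit of human hearing.
bat_call = [(40_000.0, 0.3), (45_000.0, 1.0), (50_000.0, 0.4)]
print(translate_spectrum(bat_call, -38_000.0))
# [(2000.0, 0.3), (7000.0, 1.0), (12000.0, 0.4)]
```

Because every component moves by the same amount, the spacing between them (5 kHz here) is preserved. Only the position of the whole band changes.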

Compression/Decompression (frequencies)

One possible transform that we don’t seem to see much, if at all (I am currently unaware of any technology that does this), is frequency compression. Take our original function and “squish” it together.

Original & Compressed Functions

To do this for vision, we would take all the “reds” in a picture and move them towards blue, and take all the “blues” and make them more red. We would take the range of colors that we normally see and “squish” it into a narrower range of colors closer to green. However, we don’t leave the “abandoned” colors unused. Instead, we move colors that are normally outside our range of detection into them. Just as we moved the “reds”, we move the “infrareds” towards blue so that they now appear as “reds”. Likewise, we move the “ultraviolets” towards red, so that they appear as “blues”. We are taking a broader range of light frequencies than the human eye can see and “squishing” it into the range that we can see.
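The “squishing” can be sketched as a linear remapping from a wide sensor band onto the visible band. This sketch assumes a hypothetical sensor that sees roughly 300-1000 nm, and it treats the visible band as 400-700 nm. Both ranges, and the choice to map linearly in wavelength rather than frequency, are simplifying assumptions.

```python
def remap_band(value, src_low, src_high, dst_low, dst_high):
    """Linearly map value from [src_low, src_high] onto [dst_low, dst_high]."""
    if not src_low <= value <= src_high:
        raise ValueError(f"{value} is outside the source band")
    fraction = (value - src_low) / (src_high - src_low)
    return dst_low + fraction * (dst_high - dst_low)

# Assumed bands, in nanometres: the sensor sees 300-1000 nm, the eye sees 400-700 nm.
SENSOR = (300.0, 1000.0)
VISIBLE = (400.0, 700.0)

for name, wavelength in [("near-UV", 350.0), ("blue", 450.0), ("red", 650.0), ("near-IR", 900.0)]:
    shown = remap_band(wavelength, *SENSOR, *VISIBLE)
    print(f"{name:8} {wavelength:6.1f} nm -> {shown:6.1f} nm")
```

With these numbers, red (650 nm) lands at 550 nm, in the greens. Near-infrared (900 nm) takes over the reds at about 657 nm, and near-ultraviolet (350 nm) moves into the violets at about 421 nm.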

What would the overall effect be? I don’t know. Objects that we are used to seeing in everyday vision would look decidedly “odd”. Normally visible colors would all look “green-tinged”, but we would see new colors and color combinations in familiar things. For example, Coca-Cola is black in visible light, but it is apparently transparent in the infrared. If we compress the visual spectrum so that infrareds look “red”, your glass of Coca-Cola would no longer look black, but might appear a dark, translucent ruby. I think the possibilities for this kind of frequency compression are exciting. However, I know of no “off the shelf” technology that does this, so I can’t actually demonstrate it visually.

Analogously, we could do the same for sound. Again, I’m not sure what the overall effect of compressing the audible spectrum would be. You might hear dog whistles, or rumbles from your washing machine that you never knew were there.

How about going in the opposite direction, decompressing the range of detectable sounds and images? What would the advantage be? Visually, you would lose some colors completely. Say you could only detect colors between yellow and blue, losing reds, oranges, and purples. (Note that you would still see red, orange, and purple. It’s just that what you saw as red would be a shade of yellow to everyone else, and what appeared purple to you would be a shade of blue to them.) However, since the spectrum is being stretched, differences between colors (or notes) are exaggerated. You might notice obvious differences between shades of green (or between two tones) that people sensing “normally” wouldn’t notice and would think were the same.
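Decompression is the same linear map run the other way. A narrow band is stretched across the full visible range, which multiplies every difference by the stretch factor. Here is a minimal sketch. It assumes, hypothetically, that the detected band runs from about 480 nm (blue) to 580 nm (yellow), and that the visible range is 400-700 nm.

```python
def stretch(value, src_low, src_high, dst_low=400.0, dst_high=700.0):
    """Linearly stretch a narrow band [src_low, src_high] (nm) across [dst_low, dst_high]."""
    scale = (dst_high - dst_low) / (src_high - src_low)
    return dst_low + (value - src_low) * scale

# Assumed narrow band: roughly blue (480 nm) to yellow (580 nm).
g1, g2 = 520.0, 525.0  # two greens only 5 nm apart
s1 = stretch(g1, 480.0, 580.0)
s2 = stretch(g2, 480.0, 580.0)
print(s2 - s1)  # 15.0
```

In this example the stretch factor is 3, so two greens 5 nm apart end up 15 nm apart. Anything outside 480-580 nm would be mapped outside 400-700 nm and become invisible, which is the color loss described above.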

Compression/Decompression (fields)

Think about the concept of “field of view”. Humans see images in a “band” in front of them about 180 degrees right-left and about 90-120 degrees up-down (these are my personal estimates).

What if we were to take a “chunk” of that band and stretch it? We do this all the time, and we even have a word for it: magnification. That’s all that is really happening with a telescope, magnifying glass, or microscope: we’re taking a small piece of our visual field and stretching it to look bigger.

How about the reverse? What if you could take a 360-degree right-left panoramic view and compress it into your field of view? Things would look decidedly distorted and squished, and spatial relationships would take some time to get used to. Things at the far left and far right of your view would really be close together, and behind you! However, you would literally be able to watch everything at once, including what is directly behind you. Could you take this to the extreme and compress a 360-degree image in both directions into your field of view, making your field of view a complete sphere? Yes, you could (lenses for this sort of thing exist). This would allow you to see anywhere not obscured by your own body (such as under your feet), but I think the visual distortion would be extreme and probably unusable. Still, it has been demonstrated experimentally that the human brain can eventually adapt to distorted images, such as an artificially inverted field of view. It would be interesting to see how much of a compressed field of view one could adapt to.
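Both magnification and panoramic compression can be sketched as scaling the angle at which a direction appears. This sketch assumes the 180-degree horizontal field of view estimated above and a simple linear scaling of angles. Real wide-angle and fisheye lenses use a variety of projections, so treat this as an illustration only. The function name `to_display_angle` is hypothetical.

```python
def to_display_angle(azimuth_deg, scale, display_half_width=90.0):
    """Map a real-world direction to where it appears in the viewer's field of view.

    azimuth_deg: 0 is straight ahead, positive is to the right, +/-180 is behind.
    scale > 1 magnifies (telescope-like); scale < 1 compresses (panoramic).
    display_half_width: half of the assumed 180-degree horizontal field of view.
    Returns the displayed angle in degrees, or None if it falls off the display.
    """
    wrapped = (azimuth_deg + 180.0) % 360.0 - 180.0  # normalise to [-180, 180)
    shown = wrapped * scale
    return shown if abs(shown) <= display_half_width else None

print(to_display_angle(90.0, 0.5))   # 45.0: a point to your right appears half-way to the edge
print(to_display_angle(180.0, 0.5))  # -90.0: directly behind lands on the left edge
print(to_display_angle(10.0, 8.0))   # 80.0: 8x magnification pushes 10 degrees near the edge
print(to_display_angle(20.0, 8.0))   # None: magnified out of view
```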

How about hearing? Can we compress or expand the “field of hearing”? Well, hearing is already omnidirectional. But consider a wrinkle on the idea of translocation discussed above. What sort of auditory perceptual differences would you experience if your right and left ears were receiving input from different locations? What would a rock concert sound like if your “ears” were on opposite sides of the stadium? How about a waterfall, if your “ears” were halfway down the valley on opposite sides? It could give one quite a different auditory perspective.
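A quick calculation shows how different that perspective would be. Our brains localize sounds partly from the tiny difference in arrival time between the two ears. The sketch below computes the largest possible difference. It assumes sound travels at about 343 m/s, and it takes 0.2 m as the width of a head and a hypothetical 200 m for a stadium.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate, in dry air at about 20 degrees C

def max_interaural_delay(ear_separation_m):
    """Largest arrival-time difference between two "ears", in seconds.

    This occurs when the source lies on the line through both ears, so the
    extra path to the far ear equals the full separation (straight-line model).
    """
    return ear_separation_m / SPEED_OF_SOUND_M_S

for label, separation in [("human head (~0.2 m)", 0.2), ("stadium (~200 m, assumed)", 200.0)]:
    print(f"{label}: {max_interaural_delay(separation) * 1000:.1f} ms")
```

That comes to about 0.6 ms for a head versus roughly 583 ms for the stadium, a delay a thousand times larger. The two “ears” would likely be heard as separate events rather than fused into a single direction.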

Digital filters

Anyone who has worked with a digital image editing program like Paint Shop Pro has run across filters or effects. Some of these have obvious uses for enhancing visual acuity, such as “edge detection”. Others, like “embossing”, are less obviously useful. Still others, like color palette transformation, blurring, color inversion, and mosaic overlays, may have specific uses. There are also myriad digital audio effects and filters that auditory input could be put through. Again, some effects would have obvious general uses, while others might find use only for specific purposes, or not at all. Finding uses for such filters would require a lot of experimentation.
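Edge detection is a good example of how simple such a filter can be. Below is a minimal, pure-Python sketch of the Sobel operator, one common edge detector (not necessarily what Paint Shop Pro uses), applied to a tiny made-up grayscale image.

```python
import math

# Sobel kernels: KX responds to left-right brightness changes, KY to up-down ones.
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(image):
    """Return the Sobel edge strength for each pixel of a grayscale image.

    image: list of equal-length rows of brightness values.
    Border pixels are left at 0.0 to keep the sketch short.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += KX[dy][dx] * pixel
                    gy += KY[dy][dx] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out

# Hypothetical 4x6 image: dark on the left, bright on the right.
img = [[0, 0, 0, 10, 10, 10] for _ in range(4)]
edges = sobel_magnitude(img)
print(edges[1])  # [0.0, 0.0, 40.0, 40.0, 0.0, 0.0]
```

Flat regions come out as 0, and the boundary between dark and bright lights up. In a real-time headset, the same per-pixel arithmetic would have to run on every video frame.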

Artificial synaesthesia

Another possible transformation is the translation of one sense into another: sound into visual cues, vision into tactile sensations, etc. This occurs naturally in some people, or in some rare cases as the result of trauma or exposure to psychedelic drugs. This condition is called synaesthesia.

Artificially mapping vision to other senses has been explored as a way of aiding people with sensory impairments. The Optacon translates a very limited field of view into simple tactile sensations, allowing visually impaired people to read ordinary printed text. The Sonic Pathfinder maps information about the surroundings into auditory cues, and is meant to aid people in spatial navigation such as walking or driving (Sonicpathfinder.org does not advocate visually impaired people driving).
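A simple vision-to-sound mapping can be sketched by scanning an image column by column. Higher rows get higher pitches, and brighter pixels get louder tones. This is a hypothetical scheme for illustration only. It is not how the Optacon or the Sonic Pathfinder actually work, and the 500-1500 Hz range is an arbitrary assumption.

```python
def column_to_chord(column, low_hz=500.0, high_hz=1500.0):
    """Turn one image column (top-to-bottom brightness values, 0-255) into a chord.

    Higher rows get higher pitches; brighter pixels get louder tones.
    Returns a list of (frequency_hz, amplitude) pairs, skipping dark pixels.
    """
    rows = len(column)
    step = (high_hz - low_hz) / (rows - 1) if rows > 1 else 0.0
    chord = []
    for r, brightness in enumerate(column):
        if brightness > 0:
            chord.append((high_hz - r * step, brightness / 255.0))
    return chord

def image_to_soundscape(image):
    """Scan columns left to right, producing one chord per column."""
    return [column_to_chord(col) for col in zip(*image)]

# A bright pixel climbing from bottom-left to top-right.
image = [
    [0, 0, 255],
    [0, 255, 0],
    [255, 0, 0],
]
for chord in image_to_soundscape(image):
    print(chord)
# [(500.0, 1.0)]
# [(1000.0, 1.0)]
# [(1500.0, 1.0)]
```

A bright diagonal rising from bottom-left to top-right becomes a rising three-note sequence.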

Mix & Match

Some really exciting possibilities open up when you start combining transforms. You could combine magnification with the finer color discrimination of frequency decompression while viewing a painting (possibly also translating the narrowed range of colors up and down). You could compress a 360-degree horizontal field of vision into your field of view and switch to “infrared view” while walking home through a darkened neighborhood. You could flip to infrared view to look for “hot spots” while working on your car’s engine, and move your auditory range up and down to listen for new “engine noises”. Of course, you’d have to be wearing the right “equipment” to do so, but it has some interesting possibilities.
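Combining transforms amounts to function composition. Each transform takes a signal and returns a new one, and a “mode” is just a chain of them. Here is a minimal sketch, assuming a spectrum represented as (frequency, amplitude) pairs. The `shift`, `gain`, and `band_pass` helpers and the ultrasonic “engine noise” scenario are all hypothetical.

```python
from functools import reduce

def compose(*transforms):
    """Chain transforms left to right: compose(f, g)(x) == g(f(x))."""
    return lambda signal: reduce(lambda acc, fn: fn(acc), transforms, signal)

# Hypothetical transforms on a spectrum of (frequency_hz, amplitude) pairs.
def shift(offset_hz):
    """Translate every component; drop any pushed to 0 Hz or below."""
    return lambda spectrum: [(f + offset_hz, a) for f, a in spectrum if f + offset_hz > 0]

def gain(factor):
    """Amplify every component by the same factor."""
    return lambda spectrum: [(f, a * factor) for f, a in spectrum]

def band_pass(low_hz, high_hz):
    """Keep only components inside [low_hz, high_hz]."""
    return lambda spectrum: [(f, a) for f, a in spectrum if low_hz <= f <= high_hz]

# "Engine noise" mode: bring ultrasonic content down, boost it, keep a comfortable band.
engine_mode = compose(shift(-18_000.0), gain(3.0), band_pass(1_000.0, 15_000.0))

spectrum = [(5_000.0, 0.2), (22_000.0, 0.1), (28_000.0, 0.05)]
print(engine_mode(spectrum))
```

Order matters. Band-passing before shifting would discard the ultrasonic components before they ever reached the audible range.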

Putting it all together

So how would we do all this? Well, currently we can’t. There just isn’t any “off the shelf” electronic gizmo that lets us do some of this, much less all of it. But it is fun to speculate about what the ultimate non-invasive, stylish sensory augmentation hardware might be like, and about the myriad possibilities of what we might be able to do with it. (For those of you who might scoff at the stylish part, you might want to check out this image of the Oakley-Razorwire sunglasses/bluetooth headset combination.)

Very interesting ideas!

I don’t think it’s very far away, and neither are devices that modify other functions. Before you know it, we won’t be able to draw the line between man and machine. Then we will start assimilating everyone, and it will be the Borg all over again.

I don’t think it is very far away at all before we can do something like this as an external helmet or rig.

I envision a device much like the light amplification goggles pictured in the article, combined with something like the Oakley-Razorwire bluetooth headset, and a belt computer to process the inputs from the microphones and cameras. The cameras would have to be infrared/ultraviolet/light-amplification models, and the microphones would need a greater pickup range than the human ear. Add a pair of small backward-facing cameras as well.

The computer would need some pretty hefty processing power. It would have to combine multiple digital video inputs into a coherent picture, transform the picture (and the audio signals) into whatever “view” the user has selected, and display the transformed sensorium to the user.

All the component technologies exist. It is just a question of whether they can be integrated successfully, and in a small enough package to be portable.

I don’t know about “other functions” and the idea of cyborgization. That’s another level of technology away entirely, and I don’t think it has been proven that such ideas are even theoretically possible yet.

This gadget we can almost build with “off the shelf” technology.

As technology evolves it will be possible to provide severely hearing impaired people with the ability to hear close to normal.

Hmmm, another whole argument is whether we should… Lots of people who were born deaf have issues with hearing people trying to ‘normalize’ them, although I can understand someone who has lost their hearing wanting it back.

What do I have to do to buy one?
